Backpropagation is the main algorithm used for training neural networks with hidden layers. The main idea is that you can calculate how much the error at the output depends on each weight in the layers before it, and then adjust those weights to reduce the error.

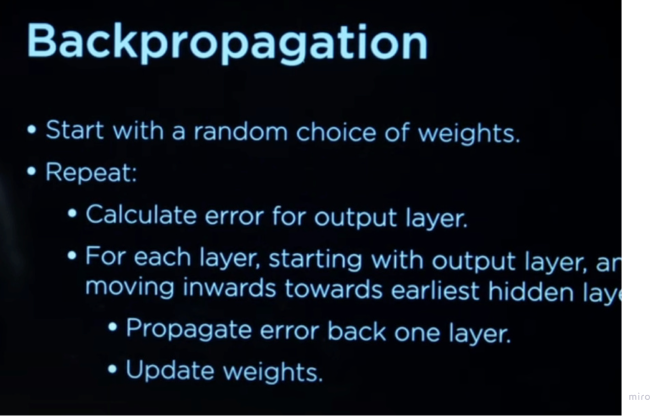

It does so by:

- Starting with the error in the output layer, calculate the gradient of the error with respect to the weights of the previous layer.

- Propagate the error back one layer and update the weights.

- Repeat this process until the input layer is reached.
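
The steps above can be sketched in plain Python. This is only a minimal illustration under assumptions that are not in the note: one hidden layer of three sigmoid units, a squared-error loss, one weight update per training example, and the OR function as toy data. All names (`net`, `train_step`, `delta_out`, `delta_hidden`) are made up for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(net, x):
    """Forward pass: returns the hidden activations and the output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(net["W1"], net["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(net["W2"], h)) + net["b2"])
    return h, y_hat

def train_step(net, x, y, lr):
    h, y_hat = forward(net, x)

    # Step 1: error signal at the output unit (dLoss/dz), assuming
    # Loss = 0.5 * (y_hat - y)^2 and sigmoid'(z) = y_hat * (1 - y_hat).
    delta_out = (y_hat - y) * y_hat * (1.0 - y_hat)

    # Step 2: propagate the error back one layer through the current W2.
    delta_hidden = [delta_out * net["W2"][j] * h[j] * (1.0 - h[j])
                    for j in range(len(h))]

    # Step 3: gradient-descent update of every weight and bias.
    for j in range(len(h)):
        net["W2"][j] -= lr * delta_out * h[j]
        net["b1"][j] -= lr * delta_hidden[j]
        for i in range(len(x)):
            net["W1"][j][i] -= lr * delta_hidden[j] * x[i]
    net["b2"] -= lr * delta_out

def mean_loss(net, data):
    return sum(0.5 * (forward(net, x)[1] - y) ** 2 for x, y in data) / len(data)

random.seed(0)
n_in, n_hidden = 2, 3
net = {
    "W1": [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
    "b1": [0.0] * n_hidden,
    "W2": [random.uniform(-1, 1) for _ in range(n_hidden)],
    "b2": 0.0,
}
# Toy data: the OR function (an assumption chosen for the example).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

loss_before = mean_loss(net, data)
for _ in range(2000):
    for x, y in data:
        train_step(net, x, y, lr=0.5)
loss_after = mean_loss(net, data)
print(loss_before, loss_after)
```

With more hidden layers, step 2 would simply be repeated once per layer, each time reusing the error signal of the layer after it.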

Intuition

- Backprop is “[[Chain Rule of Calculus]]” at scale.

- A neural net is a long chain of differentiable operations ([[Dot Products]] + bias, activation, more [[Layers]], then a [[Loss]]).

- To update a weight, we need to ask “if I nudge this weight a tiny bit, how much does the final loss change?”. That’s basically what Chain Rule is meant to help with.

- The “shape” of backprop is local derivative × local derivative × local derivative × …
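
As a small worked example of that product, take a single neuron. The symbols here are assumptions for illustration: input $x$, weight $w$, bias $b$, target $y$, sigmoid activation $\sigma$, and a squared-error loss.

$$
z = wx + b, \qquad a = \sigma(z), \qquad L = \tfrac{1}{2}(a - y)^2
$$

$$
\frac{\partial L}{\partial w}
= \frac{\partial L}{\partial a} \cdot \frac{\partial a}{\partial z} \cdot \frac{\partial z}{\partial w}
= (a - y) \cdot \sigma(z)\bigl(1 - \sigma(z)\bigr) \cdot x
$$

Each factor is one local derivative, and a deeper network just adds more factors to the product.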

Another way to think about it: Think of the forward pass as a graph of operations. Backprop sends a gradient backwards through that graph, one node at a time:

- Each node receives an upstream gradient (how much the loss changes if this node changes).

- The node multiplies by its local derivative.

- It passes that product to its inputs.

That is just the chain rule applied repeatedly.
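
A minimal sketch of that graph view, assuming scalar values only. The `Node` class and the `add`, `mul`, `square` and `backward` helpers are hypothetical names invented for this example, not any library's API.

```python
class Node:
    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0           # upstream gradient: dLoss/d(this node)
        self.parents = parents    # tuples of (input_node, local_derivative)

def add(a, b):
    return Node(a.value + b.value, ((a, 1.0), (b, 1.0)))

def mul(a, b):
    return Node(a.value * b.value, ((a, b.value), (b, a.value)))

def square(a):
    return Node(a.value ** 2, ((a, 2.0 * a.value),))

def backward(output):
    # Order the nodes so each one is handled after everything that uses it.
    order, seen = [], set()
    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)
    visit(output)

    output.grad = 1.0  # dLoss/dLoss
    for node in reversed(order):
        for parent, local in node.parents:
            # Upstream gradient times local derivative, passed to the input.
            parent.grad += node.grad * local

# loss = (w * x + b - y)^2 with w=3, x=2, b=1, y=5
w, x, b = Node(3.0), Node(2.0), Node(1.0)
neg_y = Node(-5.0)
loss = square(add(add(mul(w, x), b), neg_y))
backward(loss)
print(loss.value, w.grad, x.grad, b.grad)
```

Here the prediction is 7, the error is 2, and the gradient reaching `w` is 2 · 2 (from the square) times `x`, which is exactly the chain of local derivatives multiplied together.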

Complex Stuff

- Why Machines Learn, Chapter 3, pages 88-94, describes the curious connection between Backprop and the LMS (Least Mean Squares) algorithm devised by Widrow and Hoff in 1959. In LMS, instead of calculating the entire gradient, they decided to calculate only an estimate of it based on just one data point. Estimating a statistical parameter from a single sample is anathema, but they went with it. They took a single value of the error, squared it, and took the derivative of that squared error analytically, with no need to differentiate anything numerically. It was an extremely noisy gradient based on an extremely noisy error. Yet LMS gets close to the minimum of the function because “by making the steps small, and having lots of them, we are getting the averaging effect that takes you down to the bottom of the bowl”.

- The process of Backpropagation allows us “to calculate the derivatives backwards down any ordered table of operations, as long as the operations correspond to differentiable functions”. That final part is key: every link in that chain must be able to give us its function's derivative. (See page 337 in Why Machines Learn.)

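- Two changes made this workable: 1. using a sigmoid as the activation function instead of a binary threshold function, so that every unit is differentiable, and 2. using random initial weights instead of identical ones, which breaks the symmetry problem (hidden units that start out identical receive identical updates and never become different).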
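
The LMS idea from the first bullet above can be sketched as follows. This is an illustration under assumptions, not a reconstruction of Widrow and Hoff's original setup: a linear model, synthetic data generated from made-up weights `true_w` with a little noise, and a hypothetical step size `mu`.

```python
import random

def lms_fit(samples, mu, n_weights):
    """One small weight update per sample, using only that sample's error."""
    w = [0.0] * n_weights
    for x, d in samples:
        # Error on this ONE data point (desired output minus prediction).
        e = d - sum(wi * xi for wi, xi in zip(w, x))
        # The derivative of e^2 with respect to w_i is -2 * e * x_i,
        # so stepping against it means adding 2 * mu * e * x_i.
        w = [wi + 2.0 * mu * e * xi for wi, xi in zip(w, x)]
    return w

random.seed(1)
true_w = [2.0, -1.0]  # assumed "ground truth" used to generate toy data
samples = []
for _ in range(5000):
    x = [random.uniform(-1, 1), random.uniform(-1, 1)]
    d = sum(t * xi for t, xi in zip(true_w, x)) + random.gauss(0, 0.1)
    samples.append((x, d))

w = lms_fit(samples, mu=0.01, n_weights=2)
print(w)
```

Each individual step is based on a noisy single-sample estimate, but because the steps are small and numerous, the weights should end up close to `true_w`, which is the averaging effect the quote describes.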

Primary Resources

- Chapter 10 in Why Machines Learn is all about Backprop. Good overview for intuition.

- 3Blue1Brown’s Neural Networks course includes Chapter 5, “Backpropagation Calculus”. It’s a good primer but math-heavy.