E.g. Dropout

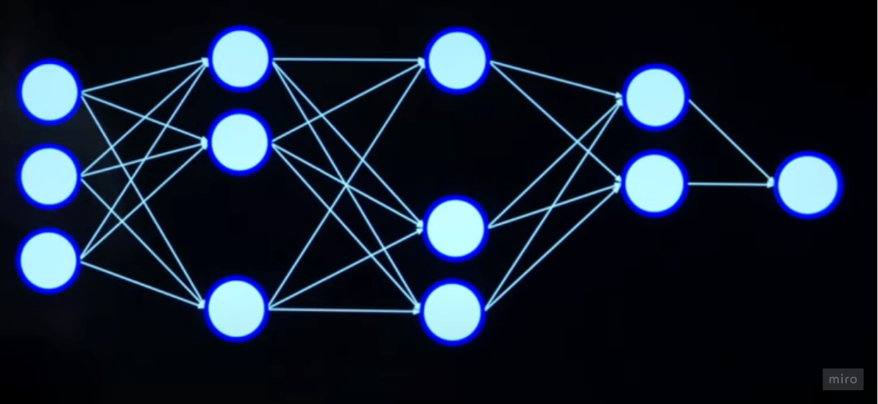

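During training, randomly and temporarily drop (switch off) some of the hidden neurons by setting their outputs to zero, so that each forward and backward pass only goes through a subset of the network. This creates new pathways and forces the network not to depend on any particular neuron. Repeat this at every training step, dropping a different random set of neurons each time. At test time dropout is turned off and all neurons are used.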
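A minimal sketch of dropout applied to one layer's activations, in plain Python with no framework. The function name `dropout` and its parameters are illustrative assumptions, not a library API (frameworks such as PyTorch provide this as `torch.nn.Dropout`). It uses the "inverted" variant, which scales the surviving activations by `1 / (1 - p)` during training so nothing has to change at inference; that scaling choice is an assumption beyond what the note above says.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], p: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout on one layer's activations.

    During training, each activation is zeroed independently with probability p,
    and the survivors are scaled by 1 / (1 - p) so the expected value of each
    activation is unchanged. At inference (training=False) it is the identity.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep_prob = 1.0 - p
    # A fresh random mask is drawn on every call, so each training step
    # drops a different subset of neurons.
    return [a / keep_prob if rng.random() >= p else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    hidden = [1.0] * 8  # stand-in activations of one hidden layer
    for step in range(3):
        # Different neurons are zeroed at each step.
        print(step, dropout(hidden, p=0.5, rng=rng))
    # Inference: all neurons kept, no scaling.
    print(dropout(hidden, p=0.5, training=False))
```

Each call with `training=True` produces a new mask, which is what "each time removing a different set of neurons" amounts to in practice.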

E.g. Early Stopping

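Monitor the loss on a held-out validation set and stop training once it has plateaued, just before it starts increasing again. The training loss will keep decreasing past that point, but the model is then overfitting. Use a validation set for this rather than the test set, so the test set remains an unbiased final check.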
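A minimal sketch of patience-based early stopping, one common way to decide that the validation loss "has plateaued": instead of stopping at the first epoch without improvement (which may just be noise), wait `patience` epochs. The class `EarlyStopping` and its `patience`/`min_delta` parameters are hypothetical names chosen for illustration (Keras has a similarly named callback, but this is not that API). The loss values are made up to mimic the curve described above: falling, flattening, then rising.

```python
import math


class EarlyStopping:
    """Signal a stop once the validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = math.inf
        self.best_epoch = -1
        self.bad_epochs = 0  # consecutive epochs without improvement

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses, made up for illustration only.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.49, 0.49, 0.50, 0.53, 0.58]
stopper = EarlyStopping(patience=3)
stop_epoch = None
for epoch, loss in enumerate(val_losses):
    # In real training, one epoch of training and a validation pass would run here.
    if stopper.step(epoch, loss):
        stop_epoch = epoch
        break

print(f"stopped at epoch {stop_epoch}, best epoch {stopper.best_epoch} (val loss {stopper.best_loss})")
# -> stopped at epoch 7, best epoch 4 (val loss 0.49)
```

Because stopping happens `patience` epochs after the best point, in practice you would also save a checkpoint whenever `best_epoch` changes and restore it after stopping.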